Responsible AI - Explainable AI - Fairness & Bias - Deep Learning - Convolution Neural Networks - Python - Transfer Learning - Fine Tuning - Hyper Parameter Tweaking - Tensorflow - Keras - Prototyping - U/X Testing

This prototype application showcases my image classification algorithm, which is built on deep neural networks. The algorithm is designed around several important qualities: fairness, transparency, explainability (XAI), and reduced bias. The application's use case is to teach AI specialists how to apply deep learning, particularly for image recognition, while keeping those qualities in mind.

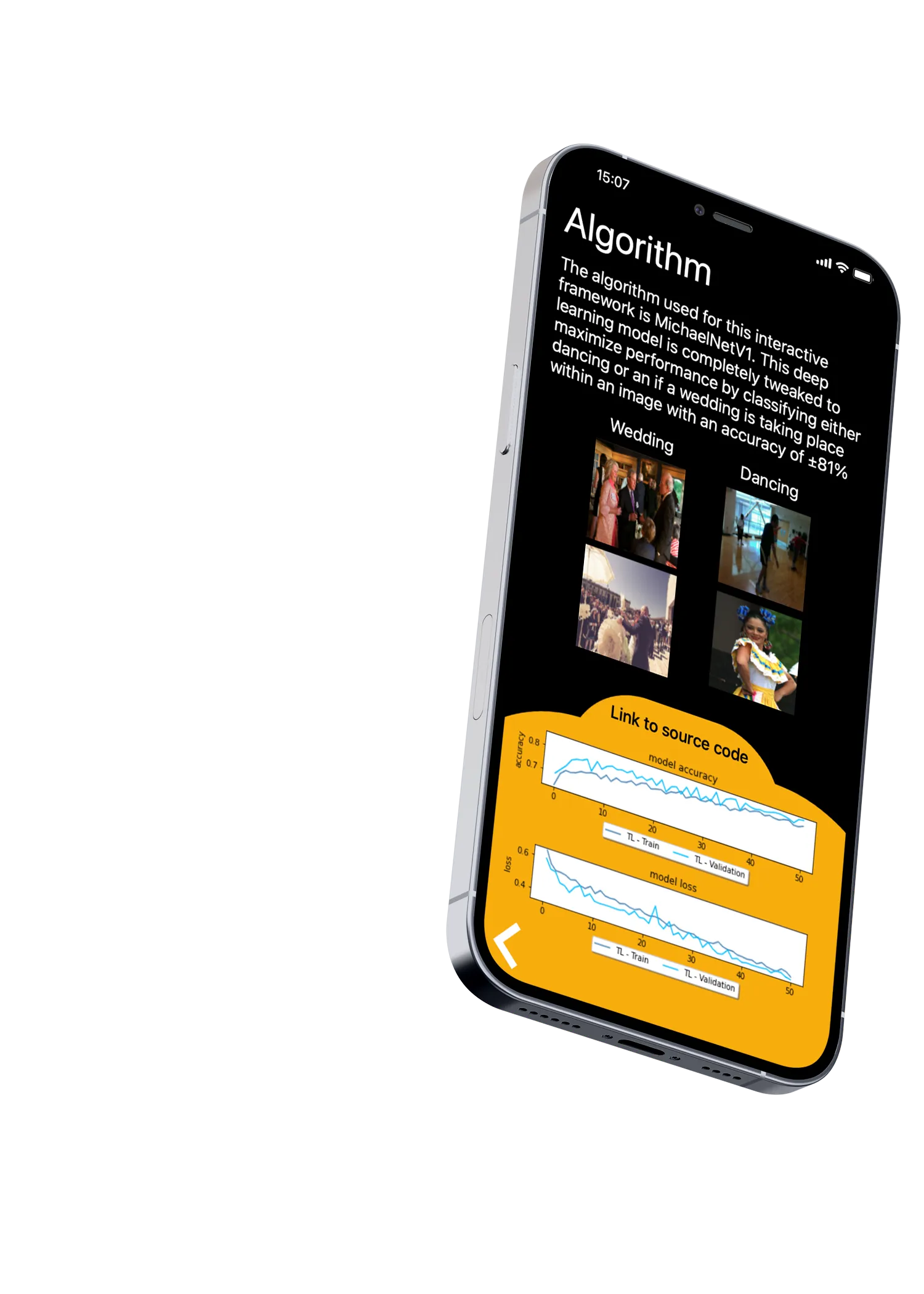

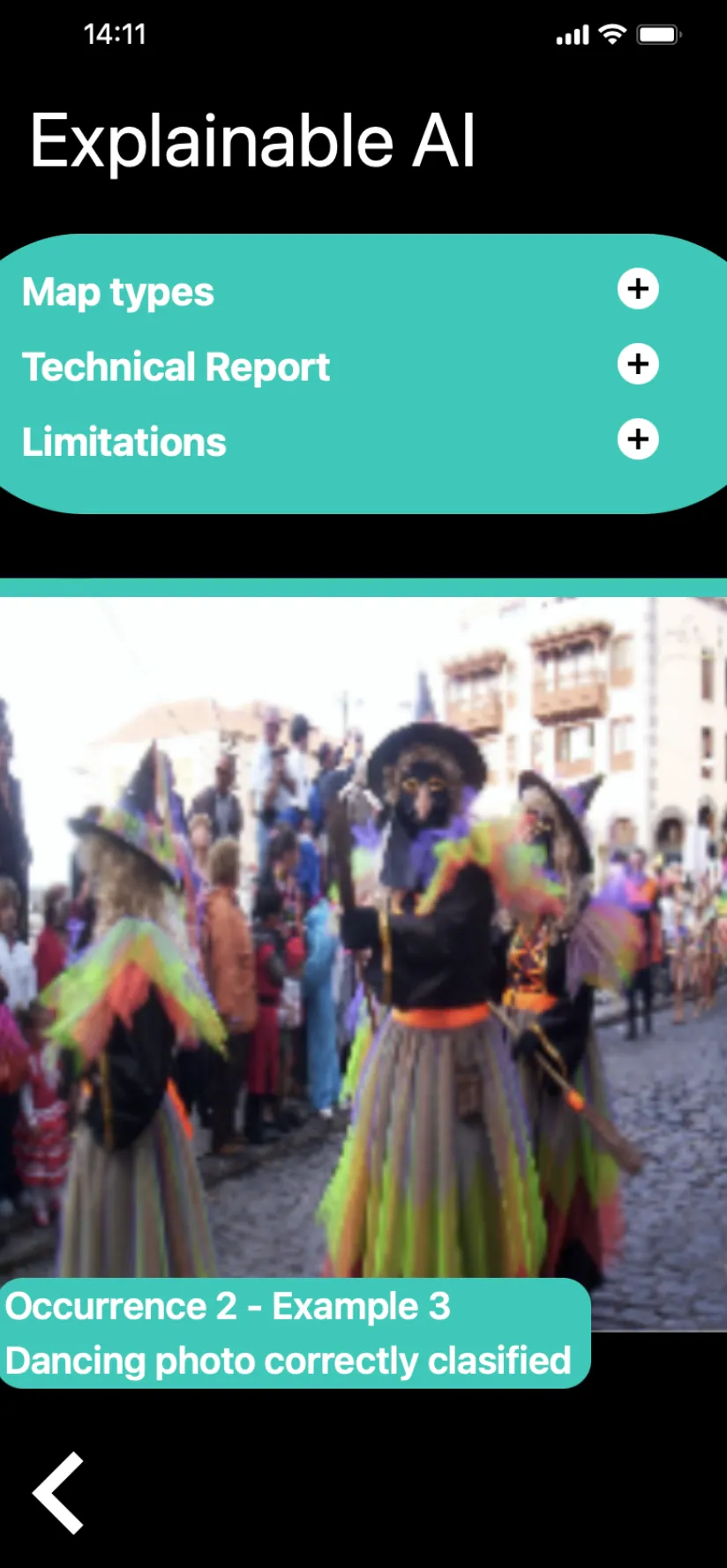

To showcase the app, I created a demonstration that covers three different screens. The demonstration can be found below this text. I adopted the "design thinking" methodology to produce a human-centered design, and I also conducted A/B testing to get the best possible user experience.

Explainable AI and Responsible AI are rapidly gaining prominence. To combat discriminatory behavior in machine learning, more and more organizations are making it a top priority to put these topics into practice.

So, in this section of the app, I critique my own model from the perspective of explainable AI by analyzing its Grad-CAM visuals.
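For readers unfamiliar with the technique, the core of Grad-CAM can be sketched in a few lines. This is a minimal illustration in NumPy, not the code used in the app: the function name `grad_cam_heatmap` is hypothetical, and it assumes you have already extracted the last convolutional layer's activations for one image and the gradient of the target class score with respect to those activations (in TensorFlow this is typically done with `tf.GradientTape`).

```python
import numpy as np


def grad_cam_heatmap(activations, gradients):
    """Combine feature maps and class-score gradients into a Grad-CAM map.

    activations: array of shape (H, W, K), the output of the last
                 convolutional layer for a single image.
    gradients:   array of shape (H, W, K), the gradient of the target
                 class score with respect to those activations.
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    activations = np.asarray(activations, dtype=np.float64)
    gradients = np.asarray(gradients, dtype=np.float64)
    if activations.ndim != 3 or activations.shape != gradients.shape:
        raise ValueError("expected two arrays of identical shape (H, W, K)")

    # One importance weight per channel: the spatial average of its gradients.
    channel_weights = gradients.mean(axis=(0, 1))  # shape (K,)

    # Weighted sum of the feature maps over the channel axis.
    cam = np.tensordot(activations, channel_weights, axes=([2], [0]))  # (H, W)

    # ReLU keeps only regions that push the class score up.
    cam = np.maximum(cam, 0.0)

    # Normalise so that maps from different images are comparable.
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The resulting map has the spatial size of the convolutional layer, so it is usually resized to the input image and overlaid as a color map. Bright regions are the ones that contributed most to the predicted class.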

According to the Grad-CAM maps, my model sometimes classifies images by focusing on the background. When the background is dark, the model is more likely to predict a dancing image. When the background is brighter and contains greenery or sky, the model is more likely to predict a wedding image. The model also takes other features into account, such as clothing, which in my opinion is a more reliable way to determine the class. The model's interpretability is explained further using the examples below.

In the images above you can see that my model focuses on the background.

Those are NOT always reliable features in terms of responsible AI.

In the images above, you can see that my model focuses on the clothing that the couples and dancers are wearing. It also focuses on the lighting effects on the dance floor (second picture). My model classified those images correctly.

Those are GOOD features in terms of responsible AI.

I hope that you now have a deeper insight into why explainable AI is so essential. Deep learning is often considered a challenging "black box": the process by which a model reaches its conclusions is extremely complicated and is sometimes assumed to be unknowable. By opening up this "black box" with techniques such as Grad-CAM, you can explain to your users or customers how your AI behaves.

The algorithm used for this app is a convolutional neural network (CNN), tuned to distinguish dancing images from wedding images. As stated earlier on this page, the use case for this model is to teach AI experts how to use deep learning in a responsible and transparent manner. To refine the model further for this goal, transfer learning and hyperparameter tuning were applied and tested. The model was developed in Python using TensorFlow/Keras.
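As an illustration of what transfer learning for a two-class problem like this can look like in Keras, here is a minimal sketch. It is not the app's actual architecture: the choice of MobileNetV2 as the pretrained base, the 224x224 input size, the function name `build_classifier`, and the default dropout and learning-rate values are all assumptions made for the example.

```python
import tensorflow as tf

IMG_SIZE = (224, 224)  # assumed input resolution, not taken from the app


def build_classifier(weights="imagenet", dropout_rate=0.2, learning_rate=1e-3):
    """Binary dancing-vs-wedding classifier on top of a frozen pretrained CNN.

    MobileNetV2 is an assumed stand-in for whichever base network was used.
    Pass weights=None to skip downloading the ImageNet weights.
    """
    base = tf.keras.applications.MobileNetV2(
        input_shape=IMG_SIZE + (3,),
        include_top=False,  # drop the original ImageNet classification head
        weights=weights,
    )
    base.trainable = False  # transfer learning: keep pretrained features fixed

    inputs = tf.keras.Input(shape=IMG_SIZE + (3,))
    # MobileNetV2 expects pixel values in [-1, 1] rather than [0, 255].
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)
    x = base(x, training=False)  # keep batch-norm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dropout(dropout_rate)(x)
    # Single sigmoid unit: the output is the probability of one of the classes.
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

In a setup like this, `dropout_rate` and `learning_rate` are the kind of hyperparameters one would vary between training runs. Fine-tuning would then mean setting `trainable = True` on some of the top layers of the base network and recompiling with a much lower learning rate.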